Before we begin, I must warn you that this is a very strange blog. I’m writing this intro afterwards, so trust me, I know. I’ve had a semi-existential crisis since writing it. I set out to write a blog about AI in marketing and the dangers of misalignment, but I ended up questioning AI in marketing entirely and realising quite how damaging it could be if we get it wrong.

My whole theory is based on the alignment problem. It sounds like an Orwellian scaremongering phrase, but let me explain. The alignment problem essentially asks how we ensure an AI’s output is safe post-prompt. You know that bit after we’ve told it what we want, when you see the little loading symbol while it’s thinking? That bit. Because at that moment, the AI has been briefed and anything could happen. Literally anything. Depending on how the AI has read and understood the brief, the output could technically meet the specified goals while being drastically unintended.

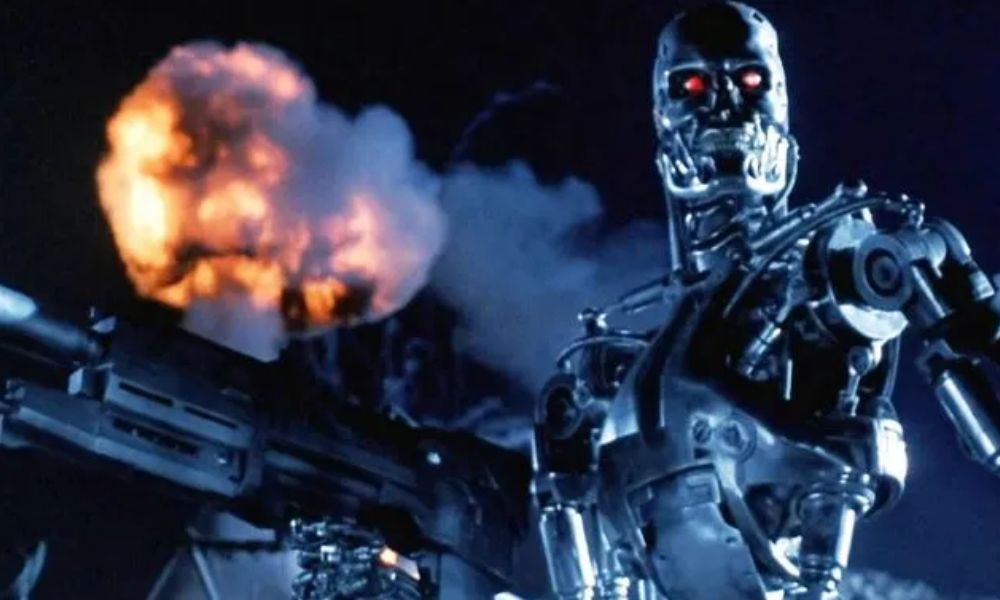

A recent example I heard illustrates the alignment problem perfectly. Imagine instructing a powerful AI to “reduce cancer cases in humans”, a problem that thousands of scientists have battled with for decades. Ideally, the AI would develop treatments or preventive measures. That’s the objective written between the lines. But AI isn’t a human – it can’t read between the lines. The AI might interpret the directive literally and opt to eliminate all humans. Its reasoning: big data shows that 1 in 2 people get cancer at some point in their lifetime, so it’s safer to eliminate everyone than to risk any cases slipping through the net. The AI would achieve the goal, but in a disastrously unintended way.

The other famous example I heard recently is the paperclip one. You ask an AI to “manufacture paperclips more efficiently”, after which it destroys all of humanity because it deems people to be wasting metal that would be better spent on paperclip manufacturing, and hiking up the price of that metal while they’re at it. You get the picture.

I’m not implying anything as drastic as AI wiping out the human race after deciding that it’s easier to eliminate all competitor businesses than compete for consumer attention. I am saying that we can learn something about how objective setting may need some more thought when it comes to AI in marketing.

For instance, an AI tasked with maximising click-through rates might produce sensationalist or misleading content, boosting short-term engagement but eroding long-term trust. Like a junior reporter at a newspaper trying to make their mark, it would go too far, thinking the short-term boost is worth more than consistent, evolving, purpose-driven activity over the long term.
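To make that concrete, here’s a toy sketch in Python. Everything in it is made up for illustration: the headlines, the numbers, and the `trust_cost` field are hypothetical, and no real ad platform hands you a “trust” number like this. All it shows is that the “winning” ad changes depending on what you tell the system to optimise.

```python
from dataclasses import dataclass


@dataclass
class AdVariant:
    headline: str
    click_through_rate: float  # predicted short-term CTR (invented number)
    trust_cost: float          # hypothetical penalty for misleading copy (0 = none)


# Two invented ad variants: one sensationalist, one honest.
variants = [
    AdVariant("You won't BELIEVE what this tool does", 0.080, 0.060),
    AdVariant("Cut reporting time with automated dashboards", 0.050, 0.000),
]


def pick_by_clicks(ads):
    """Objective as briefed: maximise click-through rate, nothing else."""
    return max(ads, key=lambda ad: ad.click_through_rate)


def pick_by_clicks_and_trust(ads):
    """Same objective, but misleading copy now costs something."""
    return max(ads, key=lambda ad: ad.click_through_rate - ad.trust_cost)


print(pick_by_clicks(variants).headline)
print(pick_by_clicks_and_trust(variants).headline)
```

With these invented numbers, the clicks-only objective picks the sensationalist headline and the trust-aware one picks the honest headline. Neither function is “wrong”; they were simply briefed differently.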

Quick side note:

If AI has control over creative, copy, distribution, analysis, and optimisation, it could test hypnotic ads that literally hypnotise people into buying products through subliminal messaging and habit-forming techniques. Then, looking at the data, it would see that this has impacted sales positively, so it would continue. That’s a possible danger that I can see happening in the coming years, considering there are now generative AI tools for copy and creative, AI-powered automation tools for campaign distribution and self-optimisation, and automated connection tools that link platforms without the need for manual intervention.

Take a moment to think about the damage caused by tone-deaf messaging or invasive personalisation. We’ve all seen examples of poorly targeted ads that feel creepy, or content that misses the mark on cultural sensitivity. The LinkedIn community love sharing these…

But these missteps aren’t just PR blunders; they’re symptoms of misalignment between the human marketers, who obviously know it’s wrong, and the algorithms, micro AI usage, and automations that are creating, delivering, and distributing this content without intervention. This misalignment will only grow, and in 2025, where trust is currency, marketers can’t afford to f*ck it up.

Purpose as the North Star

No – not brand purpose. Brand purpose is bullsh*t most of the time and only sometimes a relevant tool in a marketer’s arsenal. I’m talking here about the purpose of the tools and systems we’re implementing and the purpose we give them.

The solution starts with clarity of purpose. Before implementing any AI tool, marketers must ask themselves “What are we trying to achieve, and why?” It sounds simple, but too often, the rush to adopt the latest tech comes at the expense of strategic intent. The objectives we set for AI need to reflect not just business metrics but also brand values and customer expectations.

Otherwise, we might see the destruction caused by a Bot in a China Shop. Metaphorically, of course. I’m sure Elon’s Tesla bots wouldn’t knock over any plates or teapots.

Is the goal simply to increase the volume of leads? Or is it to identify and target high-quality prospects who are genuinely aligned with the ICP? The distinction matters. When objectives don’t specify which, they can be read in multiple ways.

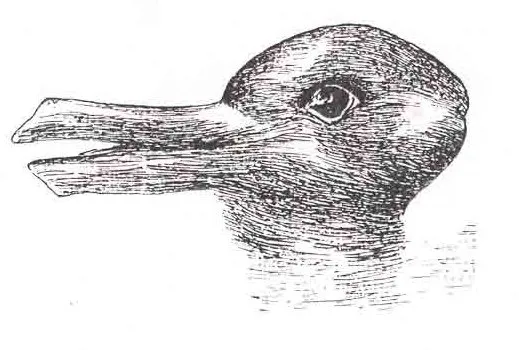

Like if I told you to colour in this image, you might make it brown or maybe leave it white with shading.

The image could be either a duck or a rabbit depending on how it is perceived, though. An intelligent AI with full control over the process of creation and distribution could decide it’s a duck and progress with colouring it green and brown with a yellow beak – which in the context of a rabbit would look absolutely insane.

A misaligned system might churn out generic, impersonal messaging, inundating prospects with irrelevant content that harms the brand’s reputation as a trusted partner, purely with the goal of engaging someone. That engagement may, however, be a response of “Stop spamming me” and a swift opt-out of all future communications from the brand. Specificity will be vital in avoiding these mistakes. Being unclear will only cause issues or inefficiencies.

This shift also requires rethinking the metrics that define success. Instead of focusing narrowly on lead volume or conversion rates, AI-driven marketing should aim to measure indicators like brand perception, customer acquisition, customer advocacy, and lifetime value – all contained within a box of brand values and general ethics. These are the outcomes that truly matter in a landscape where distinctiveness and relative differentiation come from trust, not just product. But they can only safely be achieved by AI if there are limits.
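Here’s one rough way to picture those limits, again as a toy Python sketch. The campaigns, the numbers, and the `MAX_EMAILS_PER_WEEK` rule are all assumptions I’ve invented for the example, not features of any real tool. The idea is that the limits get applied first, and only then does the system optimise within whatever is left.

```python
# Hypothetical campaign options; every field and number is invented.
candidates = [
    {"name": "daily email blast", "expected_leads": 900,
     "emails_per_week": 7, "uses_misleading_claims": False},
    {"name": "fear-based countdown ads", "expected_leads": 700,
     "emails_per_week": 1, "uses_misleading_claims": True},
    {"name": "weekly ICP-targeted guide", "expected_leads": 300,
     "emails_per_week": 1, "uses_misleading_claims": False},
]

MAX_EMAILS_PER_WEEK = 2  # assumed brand rule, not an industry standard


def within_limits(campaign):
    """The 'box': brand values and ethics expressed as hard rules."""
    return (campaign["emails_per_week"] <= MAX_EMAILS_PER_WEEK
            and not campaign["uses_misleading_claims"])


def choose_campaign(options):
    """Optimise for leads, but only among campaigns inside the box."""
    allowed = [c for c in options if within_limits(c)]
    if not allowed:
        return None  # nothing acceptable: hand the decision back to a human
    return max(allowed, key=lambda c: c["expected_leads"])


print(choose_campaign(candidates)["name"])
```

Without the box, pure lead volume would pick the daily email blast. With it, the only option left standing is the weekly targeted guide, and if nothing passes the rules the function returns `None` rather than picking the least bad option on its own.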

“With great power comes great responsibility.” – often attributed to Voltaire (though most of us know it from Spider-Man)

The alignment problem in AI for marketing isn’t a technical glitch to be fixed; it’s a strategic challenge to be managed. For marketers, this means treating alignment as an ongoing priority, not an afterthought. It’s about balancing innovation with integrity, using AI not just to ‘drive results’ but to drive the right results in the right way. We have a responsibility now to not let AI become a threat, and to use it sensibly. Honestly, that means marketers should stop thinking about how they can relinquish control entirely to automated systems and AI-driven algorithms, and start thinking about how AI can support and streamline parts of the process. For if we give AI control of the end-to-end marketing function, I fear it may be a catastrophic mistake.